Upstage has developed a remarkable LLM, Solar 10.7B, by applying the Depth Up-Scaling technique to the LLaMA2 and Mistral-7B base models.

As of writing this tutorial (03.04.2024), Upstage has opened up its Solar model as an API under the name Solar mini chat, and it is an even more powerful model than Solar 10.7B, which was open-sourced a while ago. This tutorial walks you through how to get access to Solar mini chat and how to call the model in Python.

Upstage offers a free trial of the Solar API until 03.31.2024, so try it out for free! Even after the free trial expires, this tutorial should still apply to paid users.

The workflow of this tutorial:

- Sign up for the Upstage developer console

- Explore the Upstage developer console

- Get the access token for Solar mini chat

- Interact with Solar mini chat

- Interact with Solar mini chat in stream mode

- Conclusion

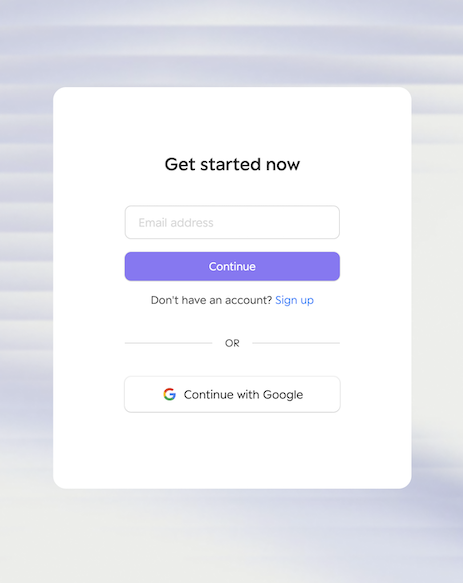

If you already have an account for Upstage’s developer console, you can simply skip this step. Otherwise, go to Upstage’s developer console, and you will see a screen similar to the screenshot above.

Click the Sign up link, or click the Continue with Google button if you want to sign up with your Google account. After that, you are all set!

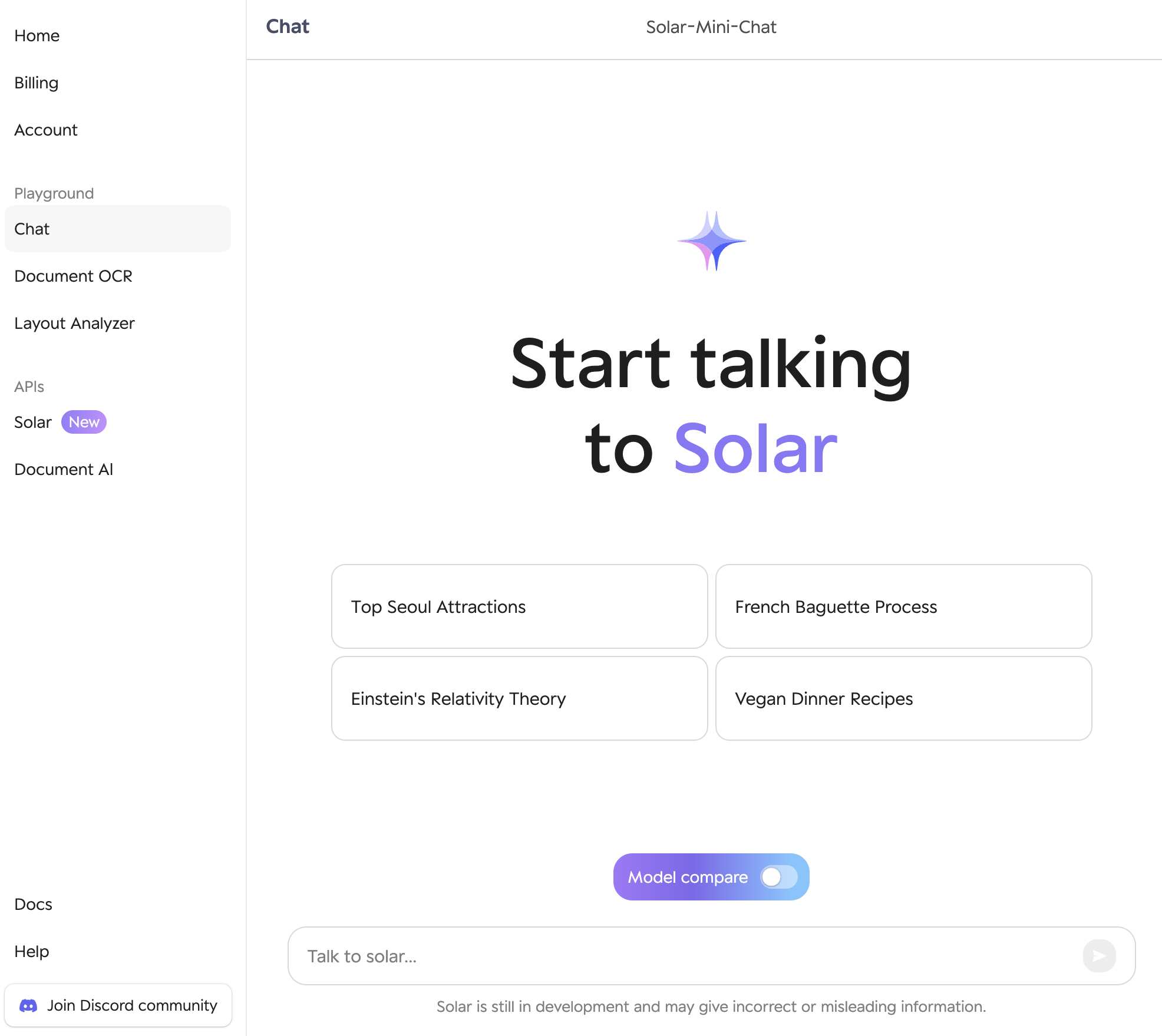

In the left navigation panel, the menus most relevant to this tutorial are Playground / Chat and APIs / Solar. Let’s try out the Solar mini chat model with the first menu.

It is a very simple chat interface, and it should look familiar if you have used ChatGPT, Gemini, or other LLM-powered chat applications. Just type anything in the text box at the bottom, and you will get a response back.

In my opinion, a chat interface is a must-have tool for developers who want to build anything on top of an LLM. Experiment a lot to find the prompts that give you the best outputs.

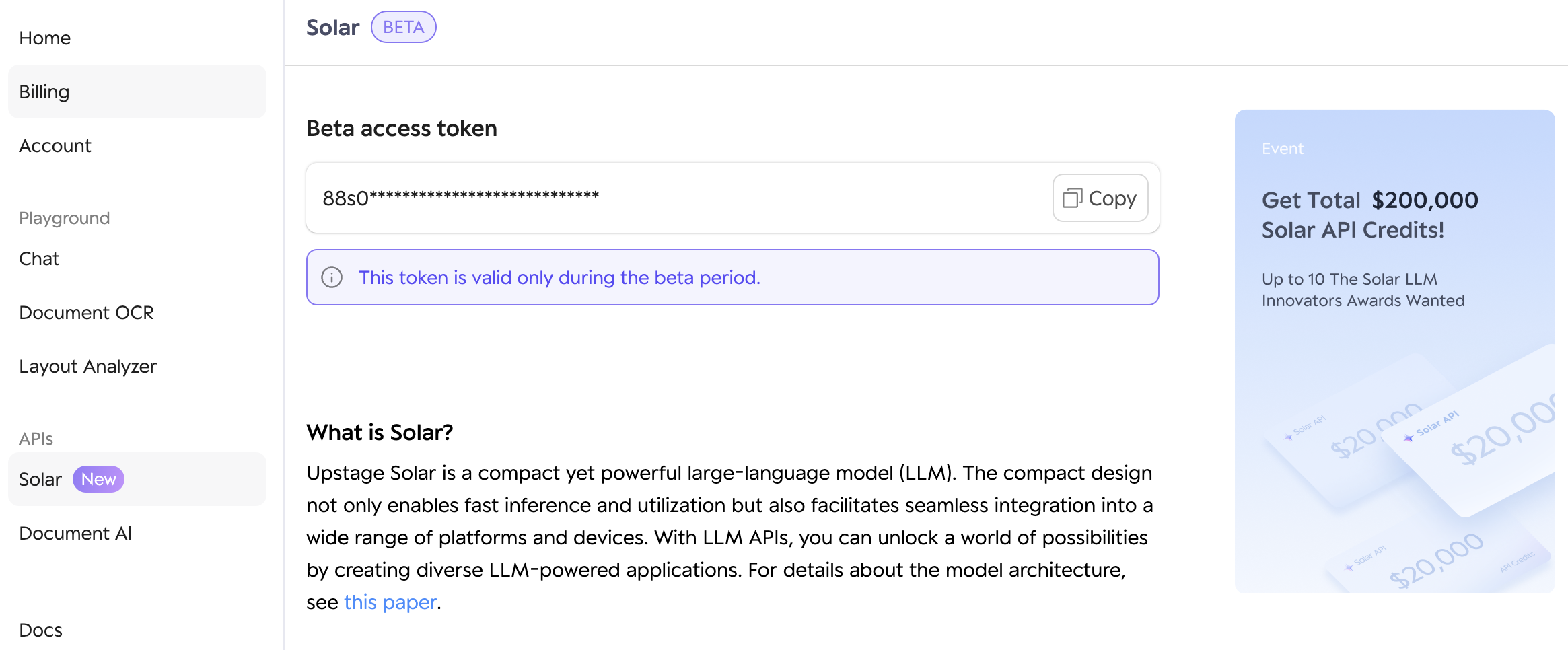

Now, let’s move to the second menu, APIs / Solar. You will see a screen similar to the screenshot above.

At the very top of the screen, you can get your own access token for the Solar mini chat API. Click the Copy button, and the token will be copied automatically. Then scroll down the page and check the actual model name, `solar-1-mini-chat`. You will need these two pieces of information to interact with the Solar mini chat model programmatically through the API.
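Rather than pasting the copied token directly into your scripts, you may prefer to keep it in an environment variable. Below is a minimal sketch, assuming a variable named `UPSTAGE_API_KEY`; that name and the helper `load_upstage_api_key` are our own hypothetical choices, not something the console requires.

```python
import os


def load_upstage_api_key(var_name: str = "UPSTAGE_API_KEY") -> str:
    """Return the Solar access token stored in the given environment variable.

    UPSTAGE_API_KEY is a hypothetical variable name chosen for this sketch.
    """
    # Strip whitespace in case the token was pasted with a trailing newline.
    api_key = os.environ.get(var_name, "").strip()
    if not api_key:
        # Fail early with a clear message instead of sending an unauthenticated request.
        raise RuntimeError(
            f"Set the {var_name} environment variable to your Upstage Solar access token."
        )
    return api_key
```

You could then pass `api_key=load_upstage_api_key()` when creating the client, so the token never appears in your source code.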

If you scroll further down the page, you will notice two other models, `solar-1-mini-translate-enko` and `solar-1-mini-translate-koen`. These are fine-tuned variants of the base Solar model that work well for translation between English and Korean.
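If you plan to switch between the chat model and the two translation models, a small lookup table keeps the model names in one place. This is a hypothetical helper for illustration: the task keys (`"chat"`, `"en-ko"`, `"ko-en"`) and the function name `solar_model_for` are our own naming, and only the model names themselves come from the console.

```python
# Model names as listed on the APIs / Solar page of the Upstage console.
# The task keys are hypothetical labels chosen for this sketch.
SOLAR_MODELS = {
    "chat": "solar-1-mini-chat",
    "en-ko": "solar-1-mini-translate-enko",  # English -> Korean
    "ko-en": "solar-1-mini-translate-koen",  # Korean -> English
}


def solar_model_for(task: str) -> str:
    """Return the Solar model name for a task, or raise ValueError for unknown tasks."""
    try:
        return SOLAR_MODELS[task]
    except KeyError:
        known = ", ".join(sorted(SOLAR_MODELS))
        raise ValueError(f"Unknown task {task!r}; expected one of: {known}") from None
```

A typo in a task key then fails loudly with the list of valid options, instead of surfacing later as an error from the API.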

The API for Upstage’s Solar mini chat follows an OpenAI-compatible format. This means you can interact with it using OpenAI’s `openai` Python package. So, the first step is to install the `openai` package as below:

```shell
$ pip install openai
```

Then, you can easily interact with the Solar mini chat model as below:

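```python
from openai import OpenAI

# Point the OpenAI client at Upstage's OpenAI-compatible Solar endpoint.
client = OpenAI(
    base_url="https://api.upstage.ai/v1/solar",
    api_key="<YOUR-UPSTAGE-SOLAR-ACCESS-TOKEN>",  # the access token copied from the console
)

# Send a single user message to the Solar mini chat model.
completion = client.chat.completions.create(
    model="solar-1-mini-chat",
    messages=[
        {
            "role": "user",
            "content": "Compose a poem that explains the concept of recursion in programming.",
        }
    ],
)

# The generated text is in the message of the first choice.
print(completion.choices[0].message.content)
```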

Here are some things to note:

- `base_url` should point to `https://api.upstage.ai/v1/solar`.

- Insert your own Solar access token as the `api_key`; this is the token you copied from the APIs / Solar page earlier.

- The `model` name should be `solar-1-mini-chat`. If you want to use the English-to-Korean or Korean-to-English translation model, replace the `model` name with `solar-1-mini-translate-enko` or `solar-1-mini-translate-koen`, respectively.

Here is the simplest possible Colab notebook that demonstrates the usage.

The above code snippet should print out something similar to the following:

```text
In the digital realm, where code is king,
A concept reigns, unique and grand,
Recursion, it's called, a method divine,
That solves problems, a beautiful design.
Imagine a task, a mountain so grand,
Too large to conquer by hand,
...
```
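Because the API is OpenAI-compatible, the `client.chat.completions.create(...)` call in the first example ultimately sends an HTTP POST to the `chat/completions` path under `base_url`, with the token in a Bearer `Authorization` header and the model and messages in a JSON body. The sketch below builds (but does not send) an equivalent request with Python’s standard library to make that shape visible. It assumes Upstage follows the usual OpenAI request conventions, and the helper name `build_chat_request` is hypothetical.

```python
import json
import urllib.request

BASE_URL = "https://api.upstage.ai/v1/solar"


def build_chat_request(api_key: str, model: str, messages: list) -> urllib.request.Request:
    """Build (without sending) an OpenAI-style chat completions request.

    Assumes the OpenAI-compatible conventions: POST {base_url}/chat/completions,
    Bearer-token auth, and a JSON body with "model" and "messages".
    """
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen(...)` would actually send it; in practice the `openai` package does this for you and also parses the JSON response into objects such as `completion.choices[0].message`.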

It is also straightforward to consume the generated output in stream mode if you are already familiar with OpenAI’s `openai` package.

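```python
from openai import OpenAI

client = OpenAI(
    base_url="https://api.upstage.ai/v1/solar",
    api_key="<YOUR-UPSTAGE-SOLAR-ACCESS-TOKEN>",
)

# stream=True returns an iterable stream of chunks instead of a single response.
completion = client.chat.completions.create(
    model="solar-1-mini-chat",
    messages=[
        {
            "role": "user",
            "content": "Compose a poem that explains the concept of recursion in programming.",
        }
    ],
    stream=True,
)

# Print each piece of text as it arrives; delta.content may be None (e.g. in the last chunk).
for chunk in completion:
    print(chunk.choices[0].delta.content or "", end="")
print()
```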

There are just two differences compared to the earlier non-streaming `client.chat.completions.create` example:

- Add the `stream=True` parameter to the `client.chat.completions.create` method.

- The value returned from `client.chat.completions.create` is an `openai.Stream` object, which is iterable. As you iterate over it, you can access each streamed piece of output via its `choices[0].delta.content` attribute, which may be `None` for some chunks.
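If you also want the complete text once streaming finishes (for logging, or to reuse in a later request), you can accumulate the deltas while printing them. In the sketch below, `collect_stream` is a hypothetical helper that treats a `None` delta as an empty string and defensively skips chunks without choices. To keep it testable offline, the usage builds fake chunks with `types.SimpleNamespace` that mimic only the `choices[0].delta.content` attribute path; with the real API you would pass it the object returned with `stream=True` instead of looping manually.

```python
import sys
from types import SimpleNamespace


def collect_stream(chunks, out=None) -> str:
    """Print each streamed delta as it arrives and return the full concatenated text."""
    out = out if out is not None else sys.stdout
    parts = []
    for chunk in chunks:
        if not chunk.choices:
            continue  # defensively skip chunks that carry no choices
        # delta.content can be None (e.g. in the final chunk), so fall back to "".
        piece = chunk.choices[0].delta.content or ""
        out.write(piece)
        out.flush()
        parts.append(piece)
    out.write("\n")
    return "".join(parts)


def fake_chunk(content):
    """Build an object with the same attribute path as a streamed chunk (testing only)."""
    return SimpleNamespace(choices=[SimpleNamespace(delta=SimpleNamespace(content=content))])


text = collect_stream([fake_chunk("Recursion, "), fake_chunk("it's called"), fake_chunk(None)])
```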

For more detailed usage of the API, check out OpenAI’s API documentation.
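As one example of further usage: assuming Solar mini chat behaves like OpenAI’s chat completions API, where each request carries the whole conversation in `messages`, a follow-up question means resending the earlier turns, including the model’s previous reply as an `assistant` message. A minimal sketch of that bookkeeping, with a hypothetical helper name `add_turn`:

```python
def add_turn(messages: list, assistant_reply: str, next_user_message: str) -> list:
    """Return a new message list with the model's reply and the next user turn appended.

    The original list is left unchanged, so earlier conversation states can be reused.
    """
    return messages + [
        {"role": "assistant", "content": assistant_reply},
        {"role": "user", "content": next_user_message},
    ]


history = [
    {"role": "user", "content": "Compose a poem that explains the concept of recursion in programming."}
]
# In real code, the reply would come from completion.choices[0].message.content.
history = add_turn(history, "In the digital realm, where code is king, ...", "Now make it a haiku.")
```

The updated `history` would then be passed as `messages=history` in the next `client.chat.completions.create(...)` call.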

We have walked through how to interact with Upstage’s Solar mini chat model through its API. As you use it, you will quickly notice that it is not only very powerful but also very fast, thanks to its compact size (just 10.7B parameters!).

It is also very convenient that the Solar mini chat API is compatible with OpenAI’s API. This makes it easy to migrate from ChatGPT to the much smaller and cheaper Solar mini model. We can’t be sure it will be as powerful as ChatGPT on every kind of task, but many businesses built around specific, narrowly scoped tasks may be able to switch to the Solar model.
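To illustrate the migration point: since both services are driven through the same `openai` client, switching is mostly a matter of changing `base_url`, `api_key`, and `model`. Below is a hypothetical configuration sketch; the provider keys, the function name `client_config`, and the choice of `gpt-3.5-turbo` as the ChatGPT-side model are assumptions for illustration only.

```python
# Hypothetical per-provider settings: only base_url and model differ between
# calling ChatGPT and calling Solar mini chat through the openai package.
PROVIDERS = {
    "openai": {"base_url": "https://api.openai.com/v1", "model": "gpt-3.5-turbo"},
    "upstage": {"base_url": "https://api.upstage.ai/v1/solar", "model": "solar-1-mini-chat"},
}


def client_config(provider: str, api_key: str) -> tuple:
    """Return (client_kwargs, model_name) for the given provider."""
    cfg = PROVIDERS[provider]
    client_kwargs = {"base_url": cfg["base_url"], "api_key": api_key}
    return client_kwargs, cfg["model"]
```

With that in place, `client = OpenAI(**client_kwargs)` followed by `client.chat.completions.create(model=model_name, messages=...)` stays the same regardless of which provider you pick.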